I went over why I use ADLS Gen2 with Databricks and how to set up a service principal to mediate permissions between them. Now we’ll configure the connection between Databricks and the storage account.

Config

This part is simple and mostly rinse-and-repeat. You need to set up a map of config values to use:

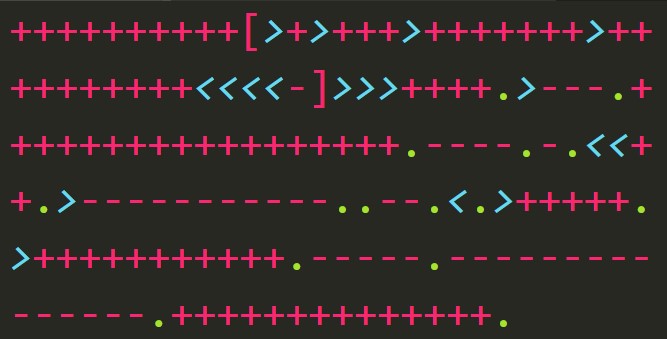

configs = {
  "fs.azure.account.auth.type": "OAuth",
  "fs.azure.account.oauth.provider.type": "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
  "fs.azure.createRemoteFileSystemDuringInitialization": "true",
  "fs.azure.account.oauth2.client.id": "<SP application ID>",
  "fs.azure.account.oauth2.client.secret": dbutils.secrets.get(scope = "gen2", key = "databricks workspace"),
  "fs.azure.account.oauth2.client.endpoint": "https://login.microsoftonline.com/<AAD tenant ID>/oauth2/token"
}

There’s nothing too magical going on here. I’ve never had a reason to change lines 2-4. Honestly, though, I’ve never needed Databricks to tell the storage account to create the file system, so I could probably do away with the createRemoteFileSystemDuringInitialization line.

Lines 5-7 might need some tweaks depending on how many service principals, tenants, and secrets you use. I work in the same tenant for everything and I use the same service principal for all of the assorted ETL stuff I work in. Lastly, I have a one-to-one relationship between secrets and Databricks workspaces. With that arrangement, I can use a single config map for all of my storage accounts.
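If you do end up juggling more than one service principal or tenant, one way to keep the rinse-and-repeat part honest is to wrap the map in a small function. This is only a sketch: build_oauth_configs and its parameter names are made up for illustration, and it leaves the create-file-system setting off unless you ask for it.

```python
def build_oauth_configs(client_id, client_secret, tenant_id, create_file_system=False):
    """Build the OAuth config map for the ABFS driver.

    build_oauth_configs is a hypothetical helper, not part of Databricks.
    """
    configs = {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }
    if create_file_system:
        # Only needed if Databricks should create the file system for you.
        configs["fs.azure.createRemoteFileSystemDuringInitialization"] = "true"
    return configs
```

In a notebook you would pass dbutils.secrets.get(scope = "gen2", key = "databricks workspace") as client_secret, the same way the map above does.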

The fill-in-the-blanks stuff threw me for a loop the first time. I always have trouble figuring out which settings or text boxes want which IDs.

Client ID

"fs.azure.account.oauth2.client.id" is the application ID of your service principal.

- Go to the Azure portal

- Go to Azure Active Directory

- Select “App registrations” in the left blade

- Select your service principal from the list of registrations

- Copy the “Application (client) ID”

Client endpoint

"fs.azure.account.oauth2.client.endpoint" is the same basic URL every time; you just plug in your Azure Active Directory tenant ID where the code snippet specifies.

- Go to the Azure portal

- Go to Azure Active Directory

- Select “Properties” in the left blade

- Copy the “Directory ID”
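Since the endpoint is the same URL with one ID swapped in, a small helper can build it and catch a placeholder or a mangled paste before Spark does. A rough sketch, assuming the tenant ID is a GUID (which is how the portal shows it); client_endpoint is a hypothetical name. Note that this can't tell a tenant ID from an application ID, since both are GUIDs.

```python
import uuid


def client_endpoint(tenant_id):
    """Return the OAuth token endpoint for a tenant (hypothetical helper)."""
    # uuid.UUID raises ValueError if the string is not a well-formed GUID,
    # e.g. when "<AAD tenant ID>" was never filled in.
    canonical = str(uuid.UUID(tenant_id))
    return f"https://login.microsoftonline.com/{canonical}/oauth2/token"
```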

Client secret

"fs.azure.account.oauth2.client.secret" is the secret you made in the Azure portal for the service principal. You can type this in directly, but the config above reads it from a Databricks secret scope with dbutils.secrets.get.

Managing secrets is a whole process on its own. I’ll dive into that soon.
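Until then, here is a minimal sketch of the lookup the config map relies on. get_client_secret is a hypothetical wrapper; the only real call in it is get(scope=..., key=...), which is what dbutils.secrets provides in a notebook. The default scope and key are the ones from the config map above, so swap in your own.

```python
def get_client_secret(secrets, scope="gen2", key="databricks workspace"):
    """Fetch the service principal secret from a secret scope.

    `secrets` is dbutils.secrets inside a Databricks notebook; any object
    with a get(scope=..., key=...) method works for local testing.
    """
    value = secrets.get(scope=scope, key=key)
    if not value:
        # Fail early instead of handing Spark an empty client secret.
        raise ValueError(f"Secret '{key}' in scope '{scope}' is empty")
    return value
```

In a notebook, that would be called as get_client_secret(dbutils.secrets).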

Storage location

This was another “duh” moment for me once I sorted it out. My problem was figuring out the protocol for the URL.

"abfss://file-system@storageaccount.dfs.core.windows.net/"

- You can use "abfs" instead of "abfss" if you want to forgo SSL

- "file-system" is the name you gave a file system within a storage account

- "storageaccount" is the name of the storage account "file-system" belongs to
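The pieces above can be glued together with a few lines of Python. This is just a sketch: abfs_uri and its parameters are hypothetical names, and it assumes the standard dfs.core.windows.net endpoint that ADLS Gen2 accounts use.

```python
def abfs_uri(file_system, storage_account, path="", secure=True):
    """Build an ABFS URI for a file system in an ADLS Gen2 account."""
    # abfss is the SSL/TLS variant; abfs skips encryption in transit.
    scheme = "abfss" if secure else "abfs"
    # Strip any leading slash so the result never contains "//" after the host.
    relative = path.lstrip("/")
    return f"{scheme}://{file_system}@{storage_account}.dfs.core.windows.net/{relative}"
```

For example, abfs_uri("file-system", "storageaccount") gives back the URL shown above.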

Recap

This time, we created the Spark configuration. We didn’t persist or use the config yet: look for an upcoming installment on mounting storage in Databricks.