Databricks is one of my favorite tools. It’s a managed Apache Spark platform used for data engineering and data science. Before I dive into using it, I’ll highlight some of its key features.

Jupyter is similar to Databricks in a lot of respects, so if you’re already familiar with Jupyter, I doubt this article will tell you much that’s new. Keep an eye out for further installments in this series.

A full-fledged IDE

The Databricks web GUI is a first-class IDE. Files, folders, and dependencies are all navigable. Data connections, jobs, and clusters can be managed and inspected.

There are separate Users and Shared areas in the workspace. When I’m working on a team project, I like to do my prototyping, debugging, and scratch pad stuff in my Users area and, once code is ready to go to a live environment, I put it in Shared.

This is an awesome feature for collaborative development and, conveniently, means you don’t need an IDE on your local machine. It also drastically reduces the chances of a “works on my machine” situation.

Notebooks

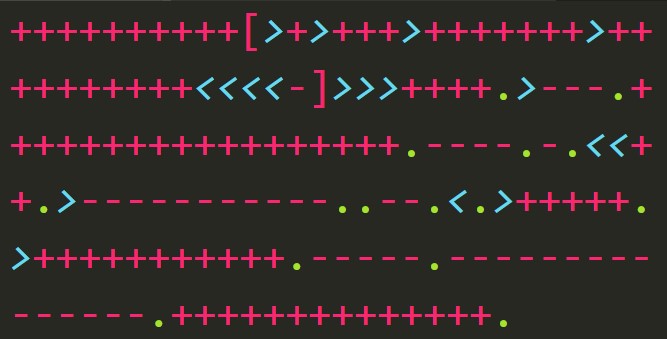

Notebooks are special files that the Databricks interface parses into command windows. The actual files combine the code and markup you write in the GUI with metadata that, among other things, indicates how the commands are divided. In the notebook analogy, command windows are like pages.

Each notebook has a default programming language: one of Python, Scala, SQL, or R. An individual command window can use a different language by starting with a magic command such as %sql on its first line.
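To make the file structure a bit more concrete, here’s a rough sketch that splits an exported notebook back into its command windows and works out each one’s language. It assumes the Python “source” export format, in which, as far as I know, the file starts with a `# Databricks notebook source` line, commands are separated by `# COMMAND ----------` lines, and commands written in another language are stored as `# MAGIC`-prefixed comment lines. The `split_commands` function and the sample notebook are my own illustrations, not a Databricks API.

```python
# Hypothetical helper (not a Databricks API): parse a notebook exported in the
# Python "source" format into (language, code) pairs, one per command window.
HEADER = "# Databricks notebook source"
SEPARATOR = "# COMMAND ----------"


def strip_magic(line: str) -> str:
    """Remove the '# MAGIC' prefix; blank magic lines may be just '# MAGIC'."""
    if line.startswith("# MAGIC "):
        return line[len("# MAGIC "):]
    if line.startswith("# MAGIC"):
        return line[len("# MAGIC"):]
    return line


def split_commands(source: str, default_language: str = "python") -> list[tuple[str, str]]:
    lines = source.splitlines()
    if lines and lines[0].strip() == HEADER:
        lines = lines[1:]  # drop the file header

    # Start a new chunk at every separator line.
    chunks: list[list[str]] = [[]]
    for line in lines:
        if line.strip() == SEPARATOR:
            chunks.append([])
        else:
            chunks[-1].append(line)

    commands = []
    for chunk in chunks:
        body = "\n".join(chunk).strip()
        if not body:
            continue  # skip empty commands
        first, *rest = body.splitlines()
        magic = strip_magic(first)
        parts = magic[1:].split()
        if first.startswith("# MAGIC") and magic.startswith("%") and parts:
            # e.g. "# MAGIC %sql" overrides the notebook's default language
            language = parts[0]
            code = "\n".join(strip_magic(l) for l in rest).strip()
        else:
            language, code = default_language, body
        commands.append((language, code))
    return commands


# Made-up two-command notebook: one Python command, one %sql command.
example = """# Databricks notebook source
df = spark.range(5)

# COMMAND ----------

# MAGIC %sql
# MAGIC SELECT 1 AS one
"""

for language, code in split_commands(example):
    print(language, "|", code)
```

The parser only treats a `%` magic on a command’s first line as a language switch, which mirrors the rule of putting the magic command at the top of the window.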

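Each language runs in its own interpreter. If you create a DataFrame (a handy data abstraction) in Python, it won’t be available as a variable to commands in other languages. All of them share the notebook’s Spark session, though, so registering the DataFrame as a temporary view makes its data queryable from SQL or Scala commands.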

Version control

As a bonus, notebooks have built-in version control and can be connected to Git repositories. This comes complete with diff views between any revision and its immediate predecessor.

The built-in version control capabilities are somewhat limited when compared to Git. I definitely recommend integrating with a Git repository for a mature and flexible process.

Try it out

If you’ve been thinking of trying it out, there’s a free community edition. It’s limited to a low-end cluster and doesn’t provide certain features like Git integration, but it has all the basic stuff needed to see if Databricks is right for you.

Next up

I’ll cover practical topics like acquiring and serializing/deserializing data, transformation, and analysis. I hope to get into machine learning and visualizations too. If you like a “come learn with me” experience, look out for those.