Now you know why I use Gen2 with Databricks, my struggle with service principals, and how I configure the connection between the two. I’m finally going to mount the storage account to the Databricks file system (DBFS) and show a couple of things I do once the mount is available.

Databricks Utilities

Save a few keystrokes by aliasing the file system utilities (dbutils.fs) to dbfs and calling them directly. The method names will be familiar to those who work in the command line, but fear not; it’s all fairly self-describing.
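As a minimal sketch of what that looks like, assuming dbfs is simply another name for the dbutils.fs object that Databricks predefines in notebooks: the helper below, list_names, is a hypothetical name made up for illustration. It takes the utilities object as a parameter so it can also be tried outside a notebook with a stand-in.

```python
def list_names(fs, path):
    """Return the names of the entries directly under `path`.

    `fs` is any object with an `ls(path)` method whose results expose a
    `.name` attribute, as `dbutils.fs` does in a Databricks notebook.
    """
    return [entry.name for entry in fs.ls(path)]


# In a notebook, where Databricks predefines `dbutils`:
#   dbfs = dbutils.fs          # assumed definition of the short alias
#   list_names(dbfs, "/mnt")   # names of whatever sits under /mnt
```

Other calls such as dbfs.mount, dbfs.unmount and dbfs.mounts work the same way through the alias.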

Mounting Gen2 storage

For my own sanity, I always unmount from a location before trying to mount to it.

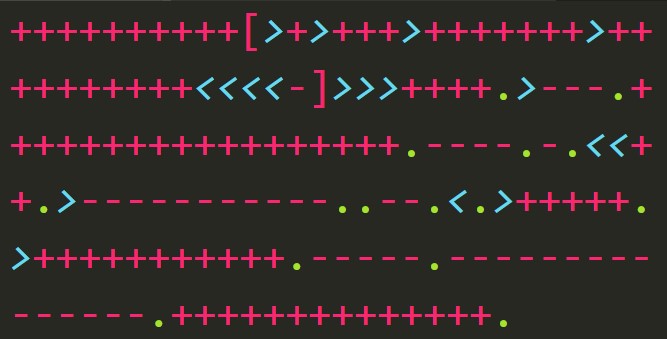

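    # Assumes dbfs refers to dbutils.fs.
    def mount(source_url, configs, mount_point):
        try:
            # Clear out whatever is currently mounted at this location.
            dbfs.unmount(mount_point)
        except Exception:
            print("The mount point didn't have anything mounted to it.")
        finally:
            # Mount whether or not the unmount succeeded.
            dbfs.mount(source=source_url,
                       mount_point=mount_point,
                       extra_configs=configs)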

I went over creating the source URL and the configs map in the previous installment. The mount point is a *nix-style path specification, with /mnt as the root.
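An alternative to unmounting blindly is to ask DBFS what is already mounted and unmount only when needed. The following is a sketch, not a replacement for the function above: remount is a hypothetical name, and the utilities object is passed in as fs (in a notebook that would be dbutils.fs) so the logic can be exercised with a stand-in. It relies on mounts() returning entries that expose a mountPoint attribute.

```python
def remount(fs, source_url, configs, mount_point):
    """Unmount `mount_point` only if something is mounted there, then mount."""
    # Collect the mount points DBFS currently knows about.
    mounted = {m.mountPoint for m in fs.mounts()}
    if mount_point in mounted:
        fs.unmount(mount_point)
    fs.mount(source=source_url,
             mount_point=mount_point,
             extra_configs=configs)


# In a notebook (values come from the previous installment):
#   remount(dbutils.fs, source_url, configs, "/mnt/awesome")
```

The trade-off is one extra call to list the mounts in exchange for not swallowing unrelated exceptions in a catch-all handler.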

Navigating mounted storage

The path names will not exactly match the paths in your file system, which is a side effect of the name of the mount point in DBFS. Say you’ve got a storage account transactionvendors001 with a file system awesome-vendor, mounted at /mnt/awesome.

When navigating Gen2 in something like Azure Storage Explorer, your paths will be transactionvendors001/awesome-vendor/…/… but the equivalent in Databricks will be /mnt/awesome/…/…
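To make the mapping concrete, here is a small sketch that rewrites a Storage Explorer style path into its DBFS equivalent. The function name to_dbfs_path and the file name used in the usage comment are hypothetical, and the function only does string manipulation; it does not touch storage.

```python
def to_dbfs_path(explorer_path, account, container, mount_point):
    """Translate '<account>/<container>/...' into '<mount_point>/...'."""
    prefix = f"{account}/{container}"
    path = explorer_path.strip("/")
    if path != prefix and not path.startswith(prefix + "/"):
        raise ValueError(f"{explorer_path!r} is not under {prefix!r}")
    # Whatever follows the account and container is the path inside the mount.
    remainder = path[len(prefix):].lstrip("/")
    root = mount_point.rstrip("/")
    return f"{root}/{remainder}" if remainder else root


# Usage, with a made-up file name:
#   to_dbfs_path("transactionvendors001/awesome-vendor/clients/a.json",
#                "transactionvendors001", "awesome-vendor", "/mnt/awesome")
#   gives "/mnt/awesome/clients/a.json"
```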

A really nice feature is wildcard syntax in path names:

    clients_df = spark.read.json('/mnt/awesome/clients/*.json', multiLine=True)

Series recap

I sang the praises of ADLS Gen2 storage combined with Azure Databricks, illustrated how I suck at service principals (and how to keep you from sucking at them), set up a boilerplate configuration for the Databricks handshake with storage accounts, and now actually mounted storage to DBFS.

This is all basically tax we’ve got to pay to get on the highway. It’s not fun and it doesn’t show off any of the awesome stuff Databricks can actually do per se. Stay tuned and I’ll shovel up some more exciting stuff from a novice perspective.