As part of my Cardalog web app, I’m writing an API that uses Azure Functions to interact with a MongoDB database. In the series introduction, I set up the app’s components. In this installment, I’ll implement a Function to read all cards from the database.

I’ve got the API and UI repositories out on GitHub. This Gist contains the complete implementation I’ll reference throughout this post.

Connecting to MongoDB

First up, install the Mongo driver package with func extensions install -p MongoDB.Driver -v 2.9.3.

Since I’m using Functions, I’m not retaining any state or resources like connections to the database. The connection is easy to spin up, so there’s just a little boilerplate needed.

var client = new MongoClient("mongodb://127.0.0.1:27017");
var db = client.GetDatabase("cardalog");
var coll = db.GetCollection<BsonDocument>("cards");

Once I mature the APIs a bit, I won’t use magic strings for configuration details and I’ll harden access rights. As long as I’m prototyping, though, I’ll do it the quick and dirty way.
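As a rough idea of what moving away from magic strings could look like, here is a minimal sketch that reads the connection details from environment variables and falls back to the prototype values. Azure Functions exposes app settings (and the Values section of local.settings.json when running locally) as environment variables. The class name CardStore and the three setting names are hypothetical, not something the project defines.

```csharp
using System;
using MongoDB.Bson;
using MongoDB.Driver;

public static class CardStore
{
    // Hypothetical setting names; nothing in the post defines them.
    private const string ConnectionSetting = "MONGO_CONNECTION_STRING";
    private const string DatabaseSetting = "MONGO_DATABASE";
    private const string CollectionSetting = "MONGO_COLLECTION";

    // Returns the named setting, or the fallback when the variable is unset.
    public static string Setting(string name, string fallback) =>
        Environment.GetEnvironmentVariable(name) ?? fallback;

    // Same three steps as the prototype code, with the strings looked up at runtime.
    public static IMongoCollection<BsonDocument> GetCards()
    {
        var client = new MongoClient(Setting(ConnectionSetting, "mongodb://127.0.0.1:27017"));
        var db = client.GetDatabase(Setting(DatabaseSetting, "cardalog"));
        return db.GetCollection<BsonDocument>(Setting(CollectionSetting, "cards"));
    }
}
```

With nothing configured, CardStore.GetCards() points at the same local cardalog.cards collection as the hard-coded version, so the prototype keeps working while the settings are introduced.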

coll is how I’ll interact with the cards collection. For my use case, this collection is similar to a relational DB table. There are some fundamental differences worth knowing. For now, just keep in mind that the collection doesn’t have a schema.

Seeding the database

Before going further, I’ll seed the database with some dummy data I created with Mockaroo. You can copy and reuse the schema or run curl "https://api.mockaroo.com/api/bb6e1fd0?count=1000&key=d0846c90" > "MtG.json" in a terminal.

Once you have the mocked data, use mongoimport -d cardalog -c cards .\MtG.json to write it to the cards collection.

Be careful with the word “schema” in this context. I mentioned that Mongo is schemaless but, for Mockaroo to generate data, I had to define a schema. You can create a different Mockaroo schema to mock up data from a different card game and those can live side-by-side in the same collection.
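To make the "side-by-side" point concrete, here is a small sketch of two differently shaped documents that could sit in the same collection. The class name MixedCards and every field name and value are made up for illustration; they are not the Mockaroo schema used in this post.

```csharp
using System.Collections.Generic;
using MongoDB.Bson;

public static class MixedCards
{
    // Builds two documents with different fields. All names and values are illustrative.
    public static List<BsonDocument> Build()
    {
        var magicCard = new BsonDocument
        {
            { "game", "Magic: The Gathering" },
            { "name", "Example Wizard" },
            { "manaCost", 3 }
        };

        // A card from another game carries different fields entirely.
        var otherCard = new BsonDocument
        {
            { "game", "Some Other Game" },
            { "name", "Example Dragon" },
            { "attack", 2500 },
            { "defense", 2000 }
        };

        return new List<BsonDocument> { magicCard, otherCard };
    }
}
```

Passing the result to coll.InsertManyAsync(MixedCards.Build()) should store both documents without any schema change, which is exactly why the reading code can't assume every card has the same fields.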

Reading cards

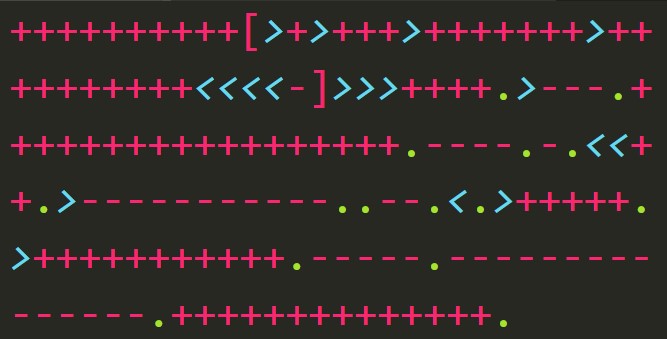

This first read implementation will be sort of naive. I want it to respond with all cards in JSON format so I’ll just create a list of BsonDocument, convert it to JSON, and ship it back to the client.

var projection = Builders<BsonDocument>.Projection.Exclude("_id");
var cardsBson = await coll
    .Find(new BsonDocument())
    .Project(projection)
    .ToListAsync();
var cards = new BsonArray(cardsBson);
return (ActionResult)new OkObjectResult(cards.ToJson());

I had some trouble deserializing the auto-generated _id so I used a projection to exclude that field from the results. Then I called IMongoCollection<T>.Find with an empty filter (new BsonDocument()), which matches every document, to get everything back.

cardsBson is a List<BsonDocument>. I can’t return that list to the client as-is, so I wrap it in a single BsonArray object, then call ToJson, which serializes the array of cards as a JSON string.
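The wrap-and-serialize step can be tried without a database. The sketch below pulls it into a hypothetical helper, CardJson.ToJsonArray, that takes any sequence of documents; the helper name is an assumption, while BsonArray and ToJson are the same driver types used above.

```csharp
using System.Collections.Generic;
using MongoDB.Bson;

public static class CardJson
{
    // Wraps the documents in one BsonArray so ToJson emits a single array string
    // rather than one string per document.
    public static string ToJsonArray(IEnumerable<BsonDocument> docs) =>
        new BsonArray(docs).ToJson();
}
```

Calling CardJson.ToJsonArray(new[] { new BsonDocument("name", "A"), new BsonDocument("name", "B") }) returns one string holding both cards as elements of an array, which is the shape the Function sends back.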

I intend to have thousands of cards in here so I’m going to implement paging eventually. For now, I’m happy sending everything back.
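Paging isn't implemented in this post, but one common approach with the driver is Skip and Limit on the fluent find. The following is only a sketch under that assumption: the CardPaging class, the 1-based page parameter, and the choice to sort by _id are all hypothetical, not the design this series ends up with.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using MongoDB.Bson;
using MongoDB.Driver;

public static class CardPaging
{
    // Number of documents to skip for a 1-based page number.
    public static int SkipFor(int page, int pageSize)
    {
        if (page < 1) throw new ArgumentOutOfRangeException(nameof(page));
        if (pageSize < 1) throw new ArgumentOutOfRangeException(nameof(pageSize));
        return (page - 1) * pageSize;
    }

    // Reads one page of cards, still excluding _id from the returned documents.
    public static Task<List<BsonDocument>> ReadPageAsync(
        IMongoCollection<BsonDocument> coll, int page, int pageSize)
    {
        var projection = Builders<BsonDocument>.Projection.Exclude("_id");
        return coll
            .Find(new BsonDocument())
            // Sorting gives a deterministic order so pages don't overlap.
            .Sort(Builders<BsonDocument>.Sort.Ascending("_id"))
            .Project(projection)
            .Skip(SkipFor(page, pageSize))
            .Limit(pageSize)
            .ToListAsync();
    }
}
```

Skip-based paging gets slower as the offset grows because the server still walks the skipped documents, so for a collection of a few thousand cards it is likely fine, but it is worth revisiting if the collection gets much larger.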

Routing the read call

Before I can move on to implementing the write Function, I want to make a couple of tweaks to the attribute that decorates the request parameter. The Function template allows GET and POST calls and doesn’t specify the route. Both are configured through HttpTriggerAttribute.

[HttpTrigger(AuthorizationLevel.Anonymous, "get", "post", Route = null)]

If the route is left null, Functions will use the name given in FunctionNameAttribute to create the URI. This first Function would then live at http://localhost:7071/api/ReadCards. I’m doing my best to adhere to REST conventions so I want to take the verb out.

Change null to "cards" and remove "post" because this Function is only for reading the collection. I now have this.

[HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = "cards")]
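For context, here is roughly how the attribute and the earlier snippets might fit together in one Function. This is a sketch, not a copy of the Gist: the class name ReadCardsFunction is hypothetical, the method signature follows the standard HTTP trigger template, and the Function name ReadCards is taken from the URI mentioned above.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;
using MongoDB.Bson;
using MongoDB.Driver;

public static class ReadCardsFunction // hypothetical class name
{
    [FunctionName("ReadCards")]
    public static async Task<IActionResult> Run(
        // GET only, served at /api/cards instead of /api/ReadCards.
        [HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = "cards")] HttpRequest req,
        ILogger log)
    {
        // Prototype-style connection with hard-coded strings, as in the post.
        var client = new MongoClient("mongodb://127.0.0.1:27017");
        var coll = client.GetDatabase("cardalog").GetCollection<BsonDocument>("cards");

        // Empty filter matches every card; the projection drops _id.
        var projection = Builders<BsonDocument>.Projection.Exclude("_id");
        var cardsBson = await coll
            .Find(new BsonDocument())
            .Project(projection)
            .ToListAsync();

        // One BsonArray, serialized to a single JSON string for the response body.
        return new OkObjectResult(new BsonArray(cardsBson).ToJson());
    }
}
```

Because "post" is no longer listed, a POST to /api/cards should be rejected by the host before this method runs, leaving that verb free for the write Function.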

Test it out

The code’s in place so all that’s left is to give it a whirl. From the CLI, run the app with func host start. Once the Functions in the project are listening, the runner prints the URI of each one. This first one is listed as ReadCards: [GET] http://localhost:7071/api/cards. Fire up a browser or your favorite tool for playing with HTTP requests (I like Postman) and send a GET request to http://localhost:7071/api/cards.

Recap

I’ve seeded my database with dummy data and implemented a basic “read everything” Function which crams all of my cards into a JSON array. I’ll need to add paging at some point and my error handling is really primitive, but I’m ready to create my first UI to display my cards.