Cosmos DB racks up charges even when it sits idle. You set the throughput in Request Units per second (RUs), and that capacity stays provisioned so Cosmos never needs “warm up time”. Naturally, you get billed for it by the hour.

I’ve spent more money than I had to by manually provisioning the throughput or, more often, by failing to scale down during non-peak hours. To let my ETL run effectively without racking up charges, I decided to set up some programmatic scaling.

Use case: scaling before large writes

My Cosmos consumers don’t need a lot of power, so I only need to ramp up the RUs for roughly a 90-minute window in the early morning when I throw a lot of writes at it. Altogether, the solution has to meet three requirements so the throughput is raised only while it’s needed.

- My load subsystem must be able to increase the throughput just before it starts loading.

- It must be able to decrease the throughput as soon as it’s done loading.

- Since Azure Data Factory orchestrates my ETL, it must be able to invoke the solution.
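Taken together, the requirements describe a scale-up, load, scale-down sequence in which the scale-down should still happen if the load fails. ADF does that sequencing in my pipeline, but the shape is easy to sketch in C#. Everything named here (`ThroughputWindow`, the two delegates, the RU parameters) is a hypothetical stand-in for illustration, not code from the repo.

```csharp
using System;
using System.Threading.Tasks;

public static class ThroughputWindow
{
    // setThroughput: hypothetical callback that changes the RUs
    //                (for example, by calling the scaling Function).
    // load:          the write-heavy work that needs the extra throughput.
    public static async Task RunAsync(
        Func<int, Task> setThroughput,
        Func<Task> load,
        int peakRus,
        int idleRus)
    {
        // Ramp up just before loading starts.
        await setThroughput(peakRus);
        try
        {
            await load();
        }
        finally
        {
            // Always ramp back down, even if the load throws.
            await setThroughput(idleRus);
        }
    }
}
```

The `finally` block is the important part: a failed load should not leave the collection running at peak throughput.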

Cosmos APIs

I had a lot of choices for programmatically interacting with Cosmos including a RESTful API, the Azure CLI, PowerShell, and .NET. Look for other options in the Quickstarts in the official documentation.

Azure Functions as a proxy

ADF has a first-class Azure Functions Activity. Functions are a lightweight compute product, which makes them perfect for my on-demand requirement. Because they’re a managed product, I don’t have to worry about configuration beyond choosing my language.

It was easiest for me to implement this in C#, so I chose the .NET library and created a .NET Core Function with an HTTP trigger.

Implementation

My implementation is stateless and is not coupled to any of my ETL subsystems. Microsoft.Azure.DocumentDB.Core is the only dependency not already bundled with the Functions App template. Ultimately, the app is plug-and-play.

Here are a couple of edited excerpts from the Function to show the most important parts. The repo is linked in the last section for your free use.

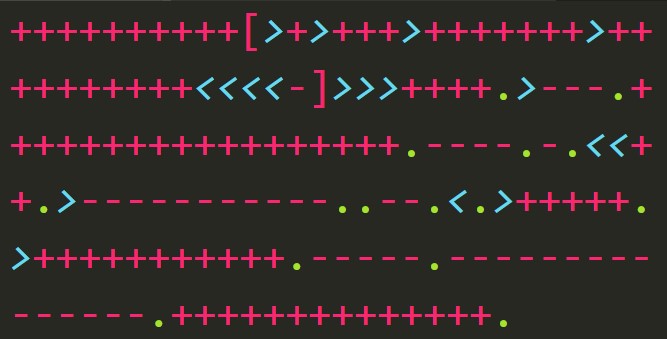

Communicating with Cosmos

Cosmos references are wired up in two stages. First, the DocumentClient (i.e. a connection to the Cosmos account) is spun up using the account’s URL and one of its keys.

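    // Connect to the Cosmos account; the client is disposed when the block exits.
    using (var client = new DocumentClient(
        new Uri(dbUrl),
        key,
        new ConnectionPolicy { UserAgentSuffix = " samples-net/3" }))
    {
        // Make sure the database exists before touching the collection.
        client.CreateDatabaseIfNotExistsAsync(new Database { Id = dbName }).Wait();
        var coll = CreateCollection(client).Result;
        ChangeThroughput(client, coll).Wait();
    }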

Second, CreateCollection, a helper method, gets a reference to a collection within the DB.

    async Task<DocumentCollection> CreateCollection(DocumentClient client)
    {
        return await client.CreateDocumentCollectionIfNotExistsAsync(
            UriFactory.CreateDatabaseUri(dbName),
            new DocumentCollection { Id = collectionName },
            new RequestOptions { OfferThroughput = throughput });
    }

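CreateDocumentCollectionIfNotExistsAsync is part of the Cosmos .NET API. As the name implies, it creates the collection only if it doesn’t already exist. I call it even when I know the collection exists because it returns the collection reference the Function needs. Note that the OfferThroughput option only takes effect when the collection is actually created, which is why the throughput of an existing collection is changed separately.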

Modifying the offer

The throughput is changed by first getting the existing offer for the collection, then replacing that offer with one that carries the throughput you want.

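    // Requires "using System.Linq;" for Where and Single.
    async Task ChangeThroughput(DocumentClient client,
                                DocumentCollection simpleCollection)
    {
        // Find the offer attached to this collection.
        var offer = client.CreateOfferQuery()
            .Where(o => o.ResourceLink == simpleCollection.SelfLink)
            .AsEnumerable()
            .Single();

        // Replace it with a copy that carries the new throughput.
        await client.ReplaceOfferAsync(new OfferV2(offer, throughput));
    }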

Beyond these excerpts, there’s some glue code and some error handling I’ve added. The repo layers all of that in.
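Because the throughput has to be a multiple of 100 RUs, one small piece of glue is a guard that normalizes the requested value before the offer is replaced. The helper below is a minimal sketch of that idea. `ThroughputRules` and `RoundUpToHundred` are hypothetical names, and rounding up instead of rejecting the request is an assumption made for illustration, not necessarily what the repo does.

```csharp
using System;

public static class ThroughputRules
{
    // Rounds a requested RU value up to the next multiple of 100.
    // Throws if the request is zero or negative.
    public static int RoundUpToHundred(int requestedRus)
    {
        if (requestedRus <= 0)
        {
            throw new ArgumentOutOfRangeException(
                nameof(requestedRus), "RUs must be positive.");
        }

        // Integer division drops the remainder, so adding 99 first rounds up.
        return checked((requestedRus + 99) / 100 * 100);
    }
}
```

With this in place, a request for 950 RUs would be sent to Cosmos as 1000, and a request that is already a multiple of 100 passes through unchanged.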

Using the Function

You’ll need to send along five values in the request headers.

- The name of the Cosmos DB

- The name of the collection

- The Cosmos account’s URL

- One of the read-write keys

- The number of RUs you want to set.

  - This must be a multiple of 100.
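For callers other than ADF, the request can be assembled with plain `HttpClient` types. This is a rough sketch only: the header names (`dbName`, `collectionName`, `dbUrl`, `key`, `throughput`), the `ScaleRequest` class, and the use of POST are all assumptions for illustration, so check the repo for the names and method the Function actually expects.

```csharp
using System;
using System.Globalization;
using System.Net.Http;

public static class ScaleRequest
{
    // Builds a request carrying the five values as headers.
    // Header names and the POST method are hypothetical.
    public static HttpRequestMessage Build(
        Uri functionUrl,
        string dbName,
        string collectionName,
        string dbUrl,
        string key,
        int throughput)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, functionUrl);
        request.Headers.Add("dbName", dbName);
        request.Headers.Add("collectionName", collectionName);
        request.Headers.Add("dbUrl", dbUrl);
        request.Headers.Add("key", key);
        request.Headers.Add(
            "throughput",
            throughput.ToString(CultureInfo.InvariantCulture));
        return request;
    }
}
```

The resulting message can be passed to `HttpClient.SendAsync`. In ADF, the same five values would go in the headers of the Azure Functions Activity.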

Source code

The source is on GitHub. Feel free to use it. If you have ideas for improvements, I’d love to hear them.