There are a bunch of ways to make data available but, if you’re just starting out or building a pet project, you probably want to keep it simple. If you don’t have your own data to start with, check out Kaggle, get some datasets, and start cooking.

Small data sample

Databricks is powerful – it’s made to be used on large and complex data but that doesn’t mean it’s no good for tiny data. For illustration, my samples throughout this series will use the following tiny CSVs.

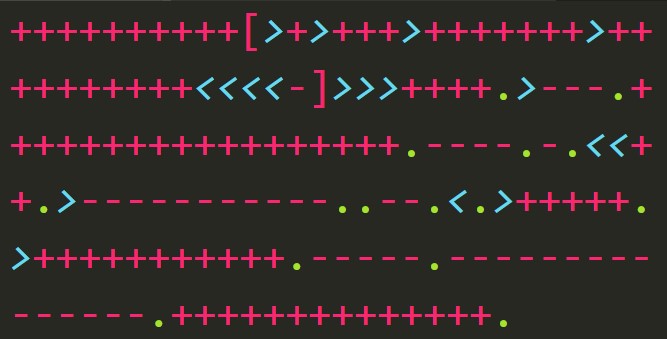

id,name,description 1,Widget,"Made from cheap plastic" 2,Gadget,

id,widget,amount,currency 1,1,0.25,USD 2,1,0.20,EUR 3,1,27,JPY 4,2,1.75,USD 5,2,1.40,EUR 6,2,189,JPY

Uploading the Data

You can save and upload that CSV, for example, as Items.csv and Pricing.csv.

The first stop is the Add Data button and Upload File interface. It’s a standard upload experience so I won’t cover it.

Once you’ve uploaded the file, it’ll give you the chance to create a table in the UI or a notebook. The notebook option spits out some boilerplate code which can create a persistent table from the file but it’s very basic. It’s a good idea to see the boilerplate but, even for a toy project, you’ll probably want to do a little more.

The important thing to remember is your files will be available at /FileStore/tables/Items.csv and /FileStore/tables/Pricing.csv.

Serializing the data

The files are now available via Databricks’ built-in storage but they still need to be read into language-level objects to be useful. If you checked the boilerplate, you saw one of the options for the reader was infer_schema.

This can be useful if you want to read in the file with very few assumptions and start tweaking the structure on the fly. I do this when I read in different datasets for every job and hand off the results to some other subsystem.

In this case, however, I’m going to use the same data across multiple notebooks using different languages.

Schema specification

These schema specify column names, types, and nullability. The pyspark.sql.types module is necessary for type specification. Read up on it to see what else it offers.

from pyspark.sql.types import StructType, StructField, StringType, IntegerType, DoubleType

items_schema = StructType([

StructField('ItemId', IntegerType(), nullable = False),

StructField('Name', StringType(), nullable = False),

StructField('Description', StringType(), nullable = True)

])

pricing_schema = StructType([

StructField('PriceId', IntegerType(), nullable = False),

StructField('ItemPriceId', IntegerType(), nullable = False),

StructField('Amount', DoubleType(), nullable = False),

StructField('Currency', StringType(), nullable = False)

])

A schema object’s structure can be arbitrarily complex. In this case, both schema are just a few columns of simple types, but it’s possible to embed structures within structures which can model semi-structured data like JSON.

Creating DataFrames

The CSV files are next read into DataFrames. This step applies the schema to the dataset and creates a language-level object we can interact with.

item_df = spark.read.csv('/FileStore/tables/Items.csv',

schema = items_schema,

header = True)

price_df = spark.read.csv('/FileStore/tables/Pricing.csv',

schema = pricing_schema,

header = True)

There isn’t much magic at play. DataFrameReader can read a bunch of stuff in addition to CSVs. The first parameter is the location of the data to read, the second is the schema I defined, and the third indicates that the first row of the file is a header.

Creating Tables

Finally, the DataFrames know how to create tables out of their data.

item_df.write.format('parquet') \

.mode('overwrite') \

.saveAsTable('items')

price_df.write.format('parquet') \

.mode('overwrite') \

.saveAsTable('pricing')

That’s all self-describing but, for reference, modes are documented. Other available formats include JSON, text, CSV, and ORC.

Recap

I essentially took the raw data and moved it as-is into tables named items and pricing. From here I can perform transformations, create visualizations, and store the data in new tables.

Check out my series on ADLS Gen2 and Azure Databricks to see one example of using Databricks as one component in a larger system.

I published a complete notebook using large(ish) datasets with wide schemas. Databricks kills those links after six months so it’ll go down eventually. Rest assured the samples in this article cover all the same stuff and are much more readable.